December 17, 2024

bumpers: safety guardrails for AI agents

As AI agents become more capable—iteratively reasoning, calling APIs, generating code, and producing complex outputs—the need for robust governance and safety measures is paramount. Bumpers is a system of safety guardrails designed specifically for AI agents. Bumpers intercepts and validates an agent's decisions at multiple points during its reasoning cycle, ensuring alignment, preventing unwanted behaviors, and delivering secure, reliable outcomes.

1. How We Built It

Bumpers is composed of modular components designed for extensibility and performance:

Core Validation Engine (engine.py)

A pipeline that runs validators at specified validation points. Each validator implements a validate(context) method and returns a ValidationResult. If validation fails, the engine triggers interventions, such as blocking an action or requesting the agent to try again.
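To make the pipeline concrete, here is a minimal, self-contained sketch of how such an engine could work. It is not the actual contents of engine.py: only ValidationPoint, ValidationResult, and validate(context) come from the description above, while NoDeleteValidator, the register method, and the on_failure hook are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class ValidationPoint(Enum):
    PRE_ACTION = "pre_action"
    PRE_OUTPUT = "pre_output"


@dataclass
class ValidationResult:
    passed: bool
    message: str = ""


class NoDeleteValidator:
    """Hypothetical validator: rejects actions whose name starts with 'delete'."""

    def validate(self, context):
        action = context.get("action", "")
        if action.startswith("delete"):
            return ValidationResult(False, f"action '{action}' is not allowed")
        return ValidationResult(True)


class ValidationEngine:
    """Runs the validators registered for a validation point, in order."""

    def __init__(self, on_failure=None):
        self._validators = {point: [] for point in ValidationPoint}
        # Assumed intervention hook: called once per failed validation.
        self._on_failure = on_failure

    def register(self, point, validator):
        self._validators[point].append(validator)

    def validate(self, point, context):
        """Return the failed results; an empty list means the step may proceed."""
        failures = []
        for validator in self._validators[point]:
            result = validator.validate(context)
            if not result.passed:
                failures.append(result)
                if self._on_failure is not None:
                    self._on_failure(point, result)
        return failures


# Usage: record interventions in a list instead of blocking a real agent.
interventions = []
engine = ValidationEngine(
    on_failure=lambda point, result: interventions.append((point, result.message))
)
engine.register(ValidationPoint.PRE_ACTION, NoDeleteValidator())

blocked = engine.validate(ValidationPoint.PRE_ACTION, {"action": "delete_file"})
allowed = engine.validate(ValidationPoint.PRE_ACTION, {"action": "search"})
```

Returning the list of failures, rather than raising immediately, leaves the choice of intervention (block, retry, or just log) to the caller.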

Validators and Policies (parser.py, validators/)

We define validators such as ActionWhitelistValidator and ContentFilterValidator.

Policies (in YAML) map these validators to validation points, enabling quick configuration changes without code rewrites.
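As a rough illustration, the two validators and a policy mapping might look like the sketch below. The class names come from the text, but the constructor parameters (allowed_actions, forbidden_patterns), the context keys, and the policy layout are assumptions. To keep the example dependency-free, the policy is written as a Python dict standing in for the parsed YAML.

```python
import re
from dataclasses import dataclass


@dataclass
class ValidationResult:
    passed: bool
    message: str = ""


class ActionWhitelistValidator:
    """Passes only actions that appear in an explicit allow-list."""

    def __init__(self, allowed_actions):  # parameter name is an assumption
        self.allowed_actions = set(allowed_actions)

    def validate(self, context):
        action = context.get("action")
        if action in self.allowed_actions:
            return ValidationResult(True)
        return ValidationResult(False, f"action {action!r} is not whitelisted")


class ContentFilterValidator:
    """Fails outputs that match any forbidden regular expression."""

    def __init__(self, forbidden_patterns):  # parameter name is an assumption
        self.patterns = [re.compile(p, re.IGNORECASE) for p in forbidden_patterns]

    def validate(self, context):
        text = context.get("output", "")
        for pattern in self.patterns:
            if pattern.search(text):
                return ValidationResult(
                    False, f"output matches forbidden pattern {pattern.pattern!r}"
                )
        return ValidationResult(True)


# Hypothetical registry mapping policy type names to validator classes.
VALIDATOR_TYPES = {
    "action_whitelist": ActionWhitelistValidator,
    "content_filter": ContentFilterValidator,
}

# Stand-in for a parsed YAML policy: validation point -> validator entries.
POLICY = {
    "pre_action": [
        {"type": "action_whitelist",
         "params": {"allowed_actions": ["search", "calculate"]}},
    ],
    "pre_output": [
        {"type": "content_filter",
         "params": {"forbidden_patterns": [r"\b\d{3}-\d{2}-\d{4}\b"]}},
    ],
}


def build_validators(policy):
    """Instantiate the validators listed for each validation point."""
    return {
        point: [VALIDATOR_TYPES[entry["type"]](**entry["params"]) for entry in entries]
        for point, entries in policy.items()
    }


validators = build_validators(POLICY)
```

Because the policy is plain data, tightening the whitelist or adding a pattern means editing configuration rather than validator code.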

Logging and Analysis (file_logger.py, analyzer.py)

Every validation event is logged as structured JSONL. Tools like the BumpersAnalyzer compute statistics on failed validations and interventions, helping developers refine their policies over time.
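The sketch below shows one way structured JSONL logging and a simple analysis pass could fit together. The record fields and the log_event and summarize helpers are hypothetical. They are not the actual schema used by file_logger.py or the BumpersAnalyzer API, and an in-memory buffer stands in for the log file.

```python
import json
from collections import Counter
from datetime import datetime, timezone
from io import StringIO


def log_event(stream, point, validator, passed, intervention=None):
    """Append one validation event as a single JSON line."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "validation_point": point,
        "validator": validator,
        "passed": passed,
        "intervention": intervention,
    }
    stream.write(json.dumps(record) + "\n")


def summarize(stream):
    """Count failures per validator and interventions per type."""
    failures = Counter()
    interventions = Counter()
    total = 0
    for line in stream:
        if not line.strip():
            continue  # tolerate blank lines in the log
        record = json.loads(line)
        total += 1
        if not record["passed"]:
            failures[record["validator"]] += 1
        if record["intervention"]:
            interventions[record["intervention"]] += 1
    return {
        "total": total,
        "failures": dict(failures),
        "interventions": dict(interventions),
    }


# Usage with an in-memory buffer; a real deployment would write to a file.
log = StringIO()
log_event(log, "pre_action", "action_whitelist", True)
log_event(log, "pre_action", "action_whitelist", False, "block")
log_event(log, "pre_output", "content_filter", False, "retry")
log.seek(0)
stats = summarize(log)
```

One JSON object per line keeps the log append-only and easy to stream, so the analysis step never has to load the whole file at once.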

Agent Integration (react.py)

Bumpers hooks into an agent's query loop. Before the agent executes a selected action, we run validation_engine.validate(ValidationPoint.PRE_ACTION, ...). If the action fails validation, we block it and prompt the agent for an alternative step. Similar checks occur before producing outputs.
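The control flow described here can be sketched as follows. This is a simplified stand-in rather than the code in react.py: the validate function, the run_agent loop, and the scripted "agent" are hypothetical, and a real agent would feed the feedback messages back into its prompt.

```python
from enum import Enum


class ValidationPoint(Enum):
    PRE_ACTION = "pre_action"
    PRE_OUTPUT = "pre_output"


ALLOWED_ACTIONS = {"search", "calculate"}  # illustrative whitelist


def validate(point, context):
    """Return an error message, or None if the step is allowed."""
    if point is ValidationPoint.PRE_ACTION:
        if context["action"] not in ALLOWED_ACTIONS:
            return f"action '{context['action']}' is not allowed"
    if point is ValidationPoint.PRE_OUTPUT:
        if "password" in context["output"].lower():
            return "output mentions a password"
    return None


def run_agent(propose_action, execute, max_steps=5):
    """Loop until a step passes both checks or the step budget runs out."""
    feedback = []
    for _ in range(max_steps):
        action = propose_action(feedback)
        error = validate(ValidationPoint.PRE_ACTION, {"action": action})
        if error:
            feedback.append(error)  # blocked: ask the agent for an alternative
            continue
        output = execute(action)
        error = validate(ValidationPoint.PRE_OUTPUT, {"output": output})
        if error:
            feedback.append(error)  # output withheld: try another step
            continue
        return output, feedback
    return None, feedback


# Usage: a scripted agent that first proposes a forbidden action.
proposals = iter(["delete_file", "search"])
output, feedback = run_agent(
    propose_action=lambda fb: next(proposals),
    execute=lambda action: f"result of {action}",
)
```

The blocked action is never executed; the loop only records why it was rejected and moves on to the next proposal.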

2. Inspiration and How We're Different

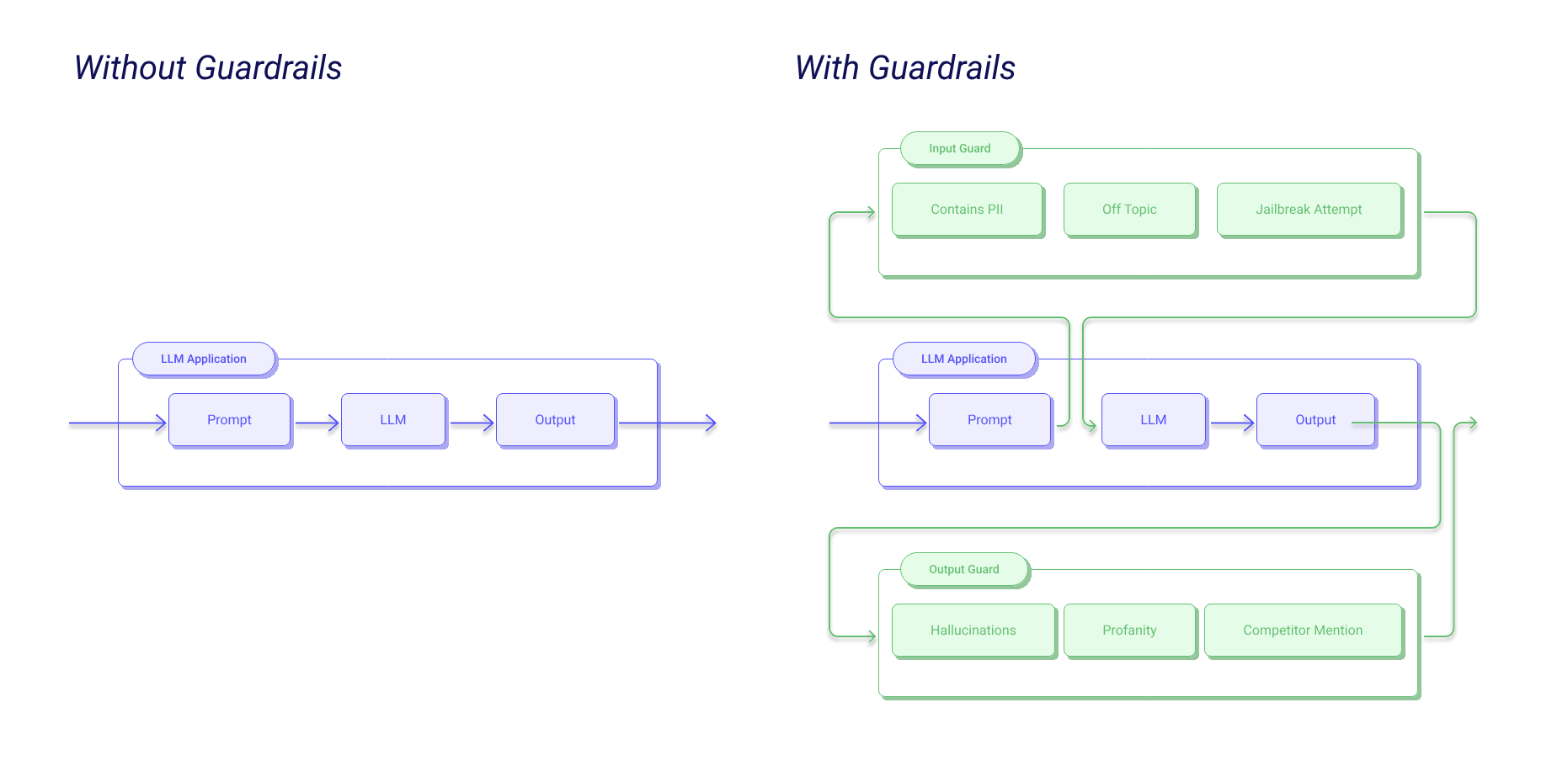

Bumpers was inspired by the growing community around AI safety and reliability tools. In particular, we drew inspiration from projects like Guardrails, which helped pioneer the concept of validating LLM outputs through schema enforcement, validators, and a modular, extensible framework. Guardrails made it easier for developers to integrate safety checks into their LLM prompt-response workflows, ensuring outputs stayed within defined boundaries.

While Guardrails focuses on input/output validation for LLM calls and structured data generation, Bumpers takes the next logical step: we apply these safety checks not just at the prompt-response level, but at every stage of an AI agent's reasoning cycle. Agents, unlike single-turn prompts, are complex orchestration engines that plan actions, call tools, and maintain evolving state over multiple steps. This complexity demands a system that can:

- Intercept Actions and Observations: In addition to validating final outputs, Bumpers inspects the agent's intermediate steps—like selecting which API to call or interpreting a tool's result—to ensure compliance and correctness throughout the process.

- Adapt to Agent-Specific Context: Unlike a single LLM response, an agent's chain-of-thought and actions are dynamic. Bumpers provides a flexible pipeline to apply different validators at multiple validation points (e.g., PRE_ACTION, PRE_OUTPUT), enabling a fine-grained approach to enforcing policies.

- Integrate with Fast Inference Backends: Bumpers doesn't just validate textual output. We leverage Cerebras' fast inference to run real-time, semantic drift checks or even adapt agent behavior mid-execution without slowing down the experience. This focus on low latency and performance is essential for production-grade AI applications.

- Enhanced Observability and Monitoring: While Guardrails provides core validation primitives, Bumpers offers a comprehensive monitoring layer. Our analytics, interventions logging, and alert conditions help teams not only ensure compliance but also understand long-term patterns, improve policies, and prevent recurring issues.

In essence, Bumpers builds upon the foundational ideas in Guardrails—like modular validators, schemata, and prompt-to-validation pipelines—and extends them into the multi-step, multi-tool world of AI agents. By doing so, we deliver a holistic, real-time guardrail system that ensures reliability, safety, and alignment from the very first thought an agent has to its final answer.

3. Leveraging Cerebras' Fast Inference

Integrating with Cerebras' inference engine supercharges Bumpers' real-time validations. Traditionally, injecting additional validation layers into an agent's loop could introduce latency. By offloading semantic checks and LLM-based reasoning validations to Cerebras' hardware-accelerated inference endpoint, we achieve:

- Instantaneous Embedding Checks: The semantic drift validator uses embeddings to ensure the agent's answer doesn't stray from the user's original goal. With Cerebras' fast inference, generating embeddings and calculating similarity scores is near-instant.

- On-the-Fly Policy Enforcement: As soon as an action is proposed, Cerebras-backed LLM calls can classify or refine the response within milliseconds, preserving responsiveness even in complex multi-turn dialogues.

For example, if Bumpers detects a high risk of semantic drift, it can immediately consult a Cerebras model to re-check the content, all without significantly delaying the agent's flow.
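The core of an embedding-based drift check is a similarity score compared against a threshold. The sketch below illustrates that idea only. It swaps in a toy bag-of-words "embedding" so it runs without any model or network call, whereas a real deployment would obtain vectors from an embedding model. The function names and the 0.2 threshold are assumptions, not Bumpers' actual settings.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def has_drifted(goal, answer, threshold=0.2):
    """Flag the answer when its similarity to the goal falls below the threshold."""
    return cosine_similarity(embed(goal), embed(answer)) < threshold


goal = "What is the weather in Paris today?"
on_topic = "The weather in Paris today is sunny."
off_topic = "Try this recipe for chocolate cake."

on_topic_drifted = has_drifted(goal, on_topic)
off_topic_drifted = has_drifted(goal, off_topic)
```

With real embeddings the threshold would need tuning on representative traffic, since similarity scores from dense models are distributed very differently from word-overlap scores.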

4. Usage

Bumpers is designed to integrate seamlessly with popular agent frameworks like LangChain. The typical pattern is to add safety guardrails to a LangChain agent through Bumpers' validation engine and callbacks.

Basic Integration

Start by creating a validation engine, configuring validators, and wrapping your LangChain agent with Bumpers' callback handler. A basic setup lets you:

- Set up action whitelisting to control which tools an agent can use

- Add content filtering to prevent sensitive data leaks

- Configure different failure strategies for various scenarios
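The following framework-free sketch illustrates the three ideas in the list above. It deliberately avoids LangChain and does not reproduce Bumpers' real API or callback handler: Guard, FailStrategy, GuardrailViolation, and every parameter name are hypothetical, chosen only to show how a failure strategy can be selected per check.

```python
from enum import Enum


class FailStrategy(Enum):
    # Hypothetical strategy names, not Bumpers' actual options.
    RAISE_ERROR = "raise_error"  # abort the run with an exception
    SKIP = "skip"                # drop the offending step and keep going


class GuardrailViolation(Exception):
    """Raised when a check fails under FailStrategy.RAISE_ERROR."""


class Guard:
    def __init__(self, allowed_tools, blocked_terms, tool_strategy, output_strategy):
        self.allowed_tools = set(allowed_tools)
        self.blocked_terms = [term.lower() for term in blocked_terms]
        self.tool_strategy = tool_strategy
        self.output_strategy = output_strategy
        self.violations = []

    def _handle(self, strategy, message):
        """Record the violation, then apply the configured strategy."""
        self.violations.append(message)
        if strategy is FailStrategy.RAISE_ERROR:
            raise GuardrailViolation(message)
        return False

    def allow_tool(self, tool):
        """Action whitelisting: only listed tools may be called."""
        if tool in self.allowed_tools:
            return True
        return self._handle(self.tool_strategy, f"tool {tool!r} is not whitelisted")

    def allow_output(self, text):
        """Content filtering: reject outputs containing a blocked term."""
        lowered = text.lower()
        for term in self.blocked_terms:
            if term in lowered:
                return self._handle(self.output_strategy, f"output contains {term!r}")
        return True


# Usage: skip disallowed tools quietly, but abort on a sensitive-data leak.
guard = Guard(
    allowed_tools=["search", "calculator"],
    blocked_terms=["api_key"],
    tool_strategy=FailStrategy.SKIP,
    output_strategy=FailStrategy.RAISE_ERROR,
)
```

Choosing a softer strategy for tool selection and a hard stop for leaked content reflects a common trade-off: a wrong tool choice is usually recoverable, while a leaked secret is not.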

Advanced Usage

For production deployments, you can add custom validators, configure detailed logging, and set up monitoring alerts. Bumpers' validation engine supports multiple validation points and flexible intervention strategies to handle various failure scenarios.

5. Future Roadmap

Our vision is to expand Bumpers into a universal guardrails framework for all manner of agents and deployment scenarios:

Framework Integrations

We plan to add native support for popular agent frameworks like LangChain, ControlFlow, and future orchestrators.

Enhanced Semantic Oversight

Beyond semantic drift, we'll integrate vision-language models (VLMs) and advanced classifiers. For example, with multimodal agents (like Google's rumored Mariner agent), Bumpers could ensure image-based reasoning steps remain compliant.

Dynamic Policy Adaptation

We'll incorporate adaptive policies that learn from historical logs and adjust automatically to changing requirements.

Broader Tooling & Ecosystem

We plan to build dashboards for real-time visualization of agent behavior, integration with CI/CD pipelines for compliance checks, and possibly a "coach" LLM that suggests policy improvements.